6500 Supervisor Slots

- The Cisco Catalyst 6500 Series can support up to two supervisor engines, one being the running (active) supervisor engine and the other the standby supervisor engine. The Supervisor Engine 1A and Supervisor Engine 2 use slots 1 and 2. The Supervisor Engine 32 and Supervisor Engine 720 use slots 1 and 2 in the Cisco Catalyst 6503 and 6504 chassis, slots 5 and 6 in the Cisco Catalyst 6506 and 6509 chassis, and slots 7 and 8 in the Cisco Catalyst 6513 chassis.

- Apr 21, 2012: The Supervisor 2T provides 2-terabit system performance with 80 Gbps of switching capacity per slot on all Catalyst 6500 E-Series chassis. As a result, you can maintain investment protection through backward compatibility.

The Supervisor 720 contains a 720 gigabit per second crossbar switching fabric that provides multiple conflict-free (non-blocking) paths between switching modules. At the back of the module you can actually see the chip that is the switching fabric:

Using the “supa klever” form of marketing math: the switching fabric is actually 360 Gb/s, but because traffic goes in at 360 Gb/s and comes out at 360 Gb/s, you can count it twice. So that is seven hundred and twenty gigabits per second. That’s the number.

Jan 19, 2018: Supervisor Engine 2 must be installed in slot 1 or slot 2. In the 6506 and 6509 chassis, Supervisor Engine 32, Supervisor Engine 32 PISA, Supervisor Engine 720, and Supervisor Engine 720-10GE must be installed in slot 5 or slot 6. Note: slots not occupied by supervisor engines can be used for modules. Check your software release notes for any restrictions on the type of module.

In fact, the switching fabric has eighteen inputs (fabric channels) of 20 Gb/s each. The backplane of your Catalyst 6500 decides how many of these are presented to each line card slot: the C6503, C6506 and C6509 present dual fabric connections, while on the C6513 it depends on the slot.

Per Slot bandwidth

Each slot in the backplane of the C6509-E chassis has two 20 Gb/s backplane connections. There are nine slots, and nine slots at 40 Gb/s is 360 Gb/s.
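The per-slot arithmetic and the marketing math from earlier can be checked in a few lines. This is a minimal sketch that uses only the figures quoted in this post (eighteen 20 Gb/s fabric channels, two channels per slot on a nine-slot C6509-E); the function names are made up for illustration.

```python
# Figures quoted in this post for the Supervisor 720 fabric.
FABRIC_CHANNELS = 18  # fabric inputs on the Sup720 crossbar
CHANNEL_GBPS = 20     # Gb/s per fabric channel, one direction


def fabric_gbps(channels: int, gbps_per_channel: int,
                count_both_directions: bool = False) -> int:
    """Aggregate fabric capacity; 'marketing math' counts both directions."""
    one_way = channels * gbps_per_channel
    return one_way * 2 if count_both_directions else one_way


def chassis_channels(slots: int, channels_per_slot: int) -> int:
    """Total fabric channels the backplane presents to the slots."""
    return slots * channels_per_slot


c6509e_channels = chassis_channels(slots=9, channels_per_slot=2)
print(c6509e_channels, "channels wanted,", FABRIC_CHANNELS, "available")
print(fabric_gbps(FABRIC_CHANNELS, CHANNEL_GBPS), "Gb/s one way")
print(fabric_gbps(FABRIC_CHANNELS, CHANNEL_GBPS, count_both_directions=True),
      "Gb/s marketing")
```

A nine-slot chassis with two channels per slot consumes exactly the eighteen channels the fabric offers, which is why every C6509-E slot gets 40 Gb/s.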

And the Switch Fabric connections look like this:

Catalyst 6513

Note that not all backplane slots on a 6513 support dual 20 Gb/s connections. Because the switching fabric on the Supervisor has only eighteen 20 Gb/s inputs and the 6513 has thirteen slots, the inputs must be laid out differently.

Therefore the slots at the top of the 6513 are only 20 Gb/s, not 40 Gb/s. This is what makes the C6513 significantly different from the C6509 chassis. The 6513 was a popular choice when low-performance line cards were used, but today high-density Gigabit Ethernet is common, and the much-increased bandwidth of servers and desktops means the C6513 is not commonly used, especially in data centres.
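To see why the 6513 cannot give every slot two channels, here is a sketch that lays eighteen channels across thirteen slots. The slot map (one channel in slots 1 to 8, two channels in slots 9 to 13) is the layout commonly documented for the C6513 with a Supervisor 720; treat it as an assumption and confirm it against Cisco's documentation for your chassis.

```python
FABRIC_CHANNELS = 18  # Sup720 fabric inputs, as quoted above
CHANNEL_GBPS = 20     # Gb/s per channel

# Assumed C6513 slot map: single channel in slots 1-8, dual in slots 9-13.
C6513_CHANNELS = {slot: (1 if slot <= 8 else 2) for slot in range(1, 14)}


def slot_bandwidth_gbps(slot_map: dict[int, int], slot: int) -> int:
    """Fabric bandwidth (one direction) presented to a given slot."""
    return slot_map[slot] * CHANNEL_GBPS


total = sum(C6513_CHANNELS.values())
print("channels used:", total, "of", FABRIC_CHANNELS)
for slot in (1, 8, 9, 13):
    print(f"slot {slot}: {slot_bandwidth_gbps(C6513_CHANNELS, slot)} Gb/s")

# Giving all thirteen slots two channels would need more than the fabric has.
print("dual channels in every slot would need:", 13 * 2)
```

Eight single-channel slots plus five dual-channel slots uses exactly eighteen channels, so a dual-channel card such as the WS-X6748-SFP only gets its full 40 Gb/s in the lower slots.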

The Switch Fabric connections look like this:

Not All Modules are created equal

Consider the following modules that are commonly used today (2009):

- WS-X6724-SFP: a 24-port Gigabit Ethernet SFP-based line card supporting a single 20 Gbps fabric channel to the Supervisor Engine 720 crossbar switch fabric. Also supports an optional Distributed Forwarding Card 3 (DFC3A, DFC3B or DFC3BXL).

- WS-X6748-SFP: a 48-port Gigabit Ethernet SFP-based line card supporting 2 x 20 Gbps fabric channels to the Supervisor Engine 720 crossbar switch fabric. Also supports an optional Distributed Forwarding Card 3 (DFC3A, DFC3B or DFC3BXL).

- WS-X6704-10GE: a 4-port 10 Gigabit Ethernet XENPAK-based line card supporting 2 x 20 Gbps fabric channels to the Supervisor Engine 720 crossbar switch fabric. Also supports an optional Distributed Forwarding Card 3 (DFC3A, DFC3B or DFC3BXL).

- WS-X6708-10GE: an 8-port 10 Gigabit Ethernet X2-optic-based line card supporting 2 x 20 Gbps fabric channels to the Supervisor Engine 720 crossbar switch fabric, with an integrated Distributed Forwarding Card 3CXL. Note that this card has 80 Gb/s of input (in one direction; 160 Gb/s counting both directions) and could easily oversubscribe its backplane and switch fabric connections.

An oversubscribed card means that packets may be delayed or dropped under heavy loads.
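One way to compare these modules is the ratio of front-panel capacity to fabric capacity. The sketch below uses the port counts and fabric channels from the list above, at 20 Gb/s per channel; a ratio above 1.0 means the card can, in principle, receive more than it can push into the fabric. It is a worst-case, every-port-at-line-rate calculation that ignores real traffic patterns, so treat it as a first-pass check only. The `LineCard` class is illustrative.

```python
from dataclasses import dataclass

CHANNEL_GBPS = 20  # Sup720 fabric channel speed quoted above


@dataclass
class LineCard:
    name: str
    ports: int
    port_gbps: int
    fabric_channels: int

    def front_panel_gbps(self) -> int:
        return self.ports * self.port_gbps

    def fabric_gbps(self) -> int:
        return self.fabric_channels * CHANNEL_GBPS

    def oversubscription(self) -> float:
        """Worst case: every port at line rate, all heading into the fabric."""
        return self.front_panel_gbps() / self.fabric_gbps()


CARDS = [
    LineCard("WS-X6724-SFP", ports=24, port_gbps=1, fabric_channels=1),
    LineCard("WS-X6748-SFP", ports=48, port_gbps=1, fabric_channels=2),
    LineCard("WS-X6704-10GE", ports=4, port_gbps=10, fabric_channels=2),
    LineCard("WS-X6708-10GE", ports=8, port_gbps=10, fabric_channels=2),
]

for card in CARDS:
    print(f"{card.name}: {card.front_panel_gbps()} Gb/s in, "
          f"{card.fabric_gbps()} Gb/s to fabric, {card.oversubscription():.1f}:1")
```

The WS-X6708-10GE comes out at 2:1 (80 Gb/s against 40 Gb/s), while the WS-X6704-10GE is the only card in the list that is not oversubscribed.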

Cross Bar Connector on the module

You can easily tell whether a blade has some form of connection to the Cross Bar fabric by looking at the connectors on the back of the card. If the Cross Bar Connector is present, then the module has some form of connection to the fabric.

Shared Bus Connector

There is also a Shared Bus on the C6500 that operates at 32 Gb/s (yes, that is 16 Gb/s in each direction, counted twice).

This is what was used in the early days of the chassis with the first-generation C61xx and C63xx modules, which could easily oversubscribe the backplane connection. Most new installations are completely fabric-switched (except for IP Telephony installations, which use the oversubscribed model and like the QoS features of the C6500 and its high power density of up to 8500 W in a single chassis).

Not Comprehensive

This post is not a comprehensive look at the modules and supervisor architecture. That is covered in a number of documents; in particular, check the white paper linked just below, which has a lot more information and is a complete reference to the architecture of the C6500.

The reason for this article is to outline some of the issues that a Data Centre designer needs to understand. The architecture of the switch (and the same logic applies to storage switches) can affect the design of the network. For example, the decision on whether to purchase a WS-X6704-10GE or a WS-X6708-10GE depends on the predicted traffic load.
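As a rough illustration of how predicted load feeds into that choice, the sketch below estimates how many cards of each type a design needs so that the predicted peak load on each card stays within its 40 Gb/s of fabric bandwidth. The port counts and fabric figures come from the module list above; the `cards_needed` helper and the example load figures are hypothetical, and a real design would also weigh burstiness, QoS and where the traffic is actually going.

```python
import math

FABRIC_GBPS_PER_CARD = 40  # 2 x 20 Gb/s fabric channels on both cards
PORTS_PER_CARD = {"WS-X6704-10GE": 4, "WS-X6708-10GE": 8}


def cards_needed(card: str, ports_required: int, peak_gbps_per_port: float) -> int:
    """Hypothetical sizing rule: provide enough 10GE ports while keeping the
    predicted peak load on each card within its fabric bandwidth."""
    if not 0 < peak_gbps_per_port <= 10:
        raise ValueError("peak load per 10GE port must be in (0, 10] Gb/s")
    ports_on_card = PORTS_PER_CARD[card]
    # Ports that can run at the predicted peak before the card's fabric
    # connection becomes the bottleneck.
    loadable = min(ports_on_card,
                   math.floor(FABRIC_GBPS_PER_CARD / peak_gbps_per_port))
    return math.ceil(ports_required / loadable)


for peak in (4.0, 9.0):
    for card in PORTS_PER_CARD:
        n = cards_needed(card, ports_required=16, peak_gbps_per_port=peak)
        print(f"16 ports at {peak} Gb/s peak each, {card}: {n} cards")
```

At a light predicted load the 8-port card halves the slot count; at a heavy load it buys nothing over the 4-port card in this model, because only four ports per card can run near line rate.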

There are other factors that might affect a design, such as the Multicast performance or the QoS features, but this article points out some of the first factors to think about when deciding whether the C6500 is the right switch. I hope you realise that knowing something about the guts of your switch, and how the insides look, is important to getting your network right.

Reference

1) Some images and concepts are drawn from "Cisco Catalyst 6500 Switch Architecture", session RST-4501, Cisco Networkers 2004.

2) Cisco Catalyst 6500 Architecture White Paper: https://www.cisco.com/en/US/prod/collateral/switches/ps5718/ps708/prod_white_paper0900aecd80673385.html

Cisco announced the Supervisor 2T engine for the Catalyst 6500E chassis a number of weeks back. The Sup2T is a boost to keep the 6500’s legs running a little longer. I think of the 2T as a product enabling customers with a large 6500 investment to put off the inevitable migration to the Nexus platform. The 2T, by all accounts, is the end of the development roadmap for the 6500. My understanding is that the 2T takes the 6500 chassis as far as it can scale in terms of packet forwarding performance.

With the advent of the Nexus 7009, I doubt we’ll see yet another replacement 6500 chassis model (like we saw with the “E” some years back). The Nexus uptake has been reasonably good for most Cisco shops, and the Nexus 7009 form factor takes away the physical space challenges faced by those previously considering the 7010 as a forklift upgrade for the widely deployed 6509. In my mind, it makes sense for Cisco to focus their Catalyst development efforts on the 4500 line for access and campus deployments, with Nexus products running NX-OS for core routing services and data center fabric. Could I be wrong? Sure. If Cisco announced a new 6500E “plus” chassis that can scale higher, then that would reflect a customer demand for the product that I personally don’t see happening. Most of the network engineering community is warming up to the Nexus gear and NX-OS.

That baseline established, Cisco is selling the Sup2T today. What does it bring to the table? Note that anything in italics is lifted directly from the Cisco architecture document referenced below in the “Links” section.

- Two Terabit (2080 Gbps) crossbar switch fabric. That’s where the “2T” comes from. These sups allow forwarding performance of up to 2 Tbps. Of course, as with previous supervisor engines, the aggregate throughput of the chassis depends on what line cards you deploy in the chassis. That old WS-X6148A you bought several years ago isn’t imbued with magical forwarding powers just because you pop a 2T into the chassis.

- The Supervisor 2T is designed to operate in any E-Series 6500 chassis. The Supervisor 2T will not be supported in any of the earlier non E-Series chassis. You know that non-E 6500 chassis running Sup720s you love so much? Gotta go if you want to upgrade to a 2T (which prompts the question: if you’re considering this, why not a Nexus 7009 instead?)

- As far as power requirements, note the following:

- The 6503-E requires a 1400 W power supply and the 6504-E requires a 2700 W power supply, when a Supervisor 2T is used in each chassis.

- While the 2500 W power supply is the minimum-sized power supply that must be used for a 6, 9, and 13-slot chassis supporting Supervisor 2T, the current supported minimum shipping power supply is 3000 W.

- Line cards are going to bite you; backwards compatibility is not what it once was. There are a lot of requirements here, so take note.

- The Supervisor 2T provides backward compatibility with the existing WS-X6700 Series Linecards, as well as select WS-X6100 Series Linecards only.

- All WS-X67xx Linecards equipped with the Central Forwarding Card (CFC) are supported in a Supervisor 2T system, and will function in centralized CEF720 mode.

- Any existing WS-X67xx Linecards can be upgraded by removing their existing CFC or DFC3x and replacing it with a new DFC4 or DFC4XL. They will then be operationally equivalent to the WS-X68xx linecards but will maintain their WS-X67xx identification.

- There is no support for the WS-X62xx, WS-X63xx, WS-X64xx, or WS-X65xx Linecards.

- Due to compatibility issues, the WS-X6708-10GE-3C/3CXL cannot be inserted in a Supervisor 2T system, and must be upgraded to the new WS-X6908-10GE-2T/2TXL.

- The Supervisor 2T Linecard support also introduces the new WS-X6900 Series Linecards. These support dual 40 Gbps fabric channel connections, and operate in distributed dCEF2T mode.
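The line-card rules above are easy to get wrong during planning, so here is a sketch that encodes them as a lookup. The rules come from the bullets above; the function name, the return strings and the forwarding-card labels are illustrative, the DFC3 branch assumes a DFC3x must be swapped out as the upgrade bullet describes, and the "select WS-X6100" case is left as "check release notes" because the post does not say which 61xx cards qualify.

```python
def sup2t_support(card: str, forwarding: str = "CFC") -> str:
    """Classify a line card for a Supervisor 2T system using the rules above.

    card:       part number, e.g. "WS-X6748-SFP"
    forwarding: daughter card fitted to a WS-X67xx card:
                "CFC", "DFC3x" or "DFC4"/"DFC4XL" (illustrative labels)
    """
    card = card.upper()
    forwarding = forwarding.upper()
    # Checked before the generic WS-X67xx rule: the 6708 is a special case.
    if card.startswith("WS-X6708-10GE"):
        return "unsupported: replace with WS-X6908-10GE-2T/2TXL"
    if card.startswith(("WS-X62", "WS-X63", "WS-X64", "WS-X65")):
        return "unsupported"
    if card.startswith("WS-X61"):
        # The text only says "select" WS-X6100 cards are supported.
        return "check release notes: only select WS-X61xx cards are supported"
    if card.startswith("WS-X69"):
        return "supported: dCEF2T mode, dual 40 Gb/s fabric channels"
    if card.startswith("WS-X68"):
        return "supported: WS-X68xx series"
    if card.startswith("WS-X67"):
        if forwarding == "CFC":
            return "supported: centralized CEF720 mode"
        if forwarding.startswith("DFC4"):
            return "supported: operationally equivalent to a WS-X68xx card"
        # Assumption: a DFC3x has to be replaced before the card will work.
        return "upgrade needed: replace the DFC3x with a DFC4 or DFC4XL"
    return "unknown: not covered by the rules above"


inventory = [("WS-X6748-SFP", "CFC"), ("WS-X6748-SFP", "DFC3B"),
             ("WS-X6708-10GE-3CXL", "CFC"), ("WS-X6548-GE-TX", "CFC")]
for part, fwd in inventory:
    print(f"{part} ({fwd}): {sup2t_support(part, fwd)}")
```

Running an existing chassis inventory through something like this makes it obvious how much of the upgrade is line cards rather than the supervisor itself.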

To summarize thus far, a legacy 6500 chassis will need to be upgraded to a 6500E. Many older series line cards are not supported at all, or will require a DFC upgrade. Power supplies are a consideration, although the base requirements are not egregious. Therefore, moving to a Sup2T will require a good bit of technical and budgetary planning. I suspect that for the majority of customers, this will not be a simple supervisor engine swap.
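The power-supply minimums quoted earlier can be folded into a quick pre-check. The wattages are the ones given in the power bullets (1400 W for the 6503-E, 2700 W for the 6504-E, and 2500 W for the 6-, 9- and 13-slot chassis, where 3000 W is the smallest supply currently shipping); the chassis keys and the `psu_ok` function are illustrative, and the check says nothing about whether the supply covers your line cards' actual draw.

```python
# Minimum power supply (watts) for a Supervisor 2T, per E-Series chassis,
# as quoted in the post. For the 6-, 9- and 13-slot chassis the smallest
# supply currently shipping is 3000 W, above the 2500 W minimum shown here.
SUP2T_MIN_PSU_W = {
    "6503-E": 1400,
    "6504-E": 2700,
    "6506-E": 2500,
    "6509-E": 2500,
    "6513-E": 2500,
}


def psu_ok(chassis: str, psu_watts: int) -> bool:
    """True if psu_watts meets the Sup2T minimum for this chassis."""
    if chassis not in SUP2T_MIN_PSU_W:
        # The Sup2T is not supported in non E-Series chassis.
        raise ValueError(f"{chassis}: Sup2T requires a 6500 E-Series chassis")
    return psu_watts >= SUP2T_MIN_PSU_W[chassis]


print(psu_ok("6504-E", 2700))
print(psu_ok("6509-E", 2000))
```

Raising an error for an unknown chassis, rather than returning False, keeps the "you need an E chassis first" problem separate from the "you need a bigger supply" problem.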

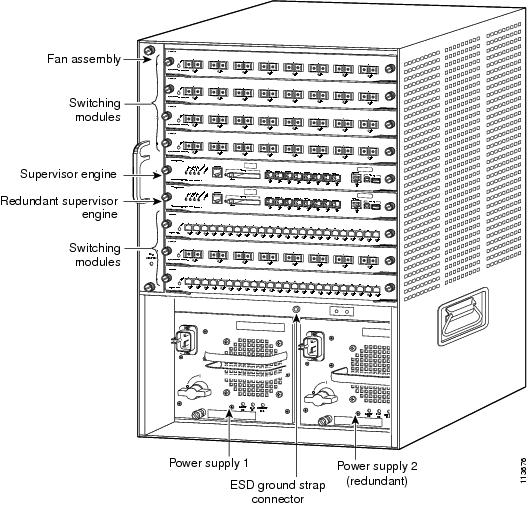

This diagram from Cisco shows the hardware layout of the Sup2T, focusing on all the major junction points a packet or frame could cross depending on ingress point, required processing, and egress point.

There are two main connectors here to what Cisco identifies as two distinct backplanes: the fabric connector and the shared bus connector. The fabric connector provides the high-speed connectors for the newer line cards, such as the new 6900 series with the dual 40 Gbps connections mentioned above. The shared bus connector supports legacy cards (sometimes referred to as “classic” cards), that is, linecards with no fabric connection, but rather connections to a bus shared with similarly capable cards.


The crossbar switch fabric is where the throughput scaling comes from. Notice that Cisco states there are “26 x 40” fabric channels in the diagram. That equates to the 2080Gbps Cisco’s talking about. The crossbar switch fabric on the Supervisor 2T provides 2080 Gbps of switching capacity. This capacity is based on the use of 26 fabric channels that are used to provision data paths to each slot in the chassis. Each fabric channel can operate at either 40 Gbps or 20 Gbps, depending on the inserted linecard. The capacity of the switch fabric is calculated as follows: 26 x 40 Gbps = 1040 Gbps; 1040 Gbps x 2 (full duplex) = 2080 Gbps.

“Full-duplex” means that what we’re really getting is 1Tbps in one direction, and 1Tbps in the other direction. The marketing folks are using weasel words to say that the Sup2T is providing a 2 terabit fabric. This marketing technique is neither new nor uncommon in the industry when describing speeds and feeds, but it is something to keep in mind in whiteboard sessions, especially if you’re planning a large deployment with specific data rate forwarding requirements.

Now here’s a strange bit. While the crossbar fabric throughput is described in the context of full-duplex, the 80 Gbps per slot is not. The 80 Gbps per slot nomenclature represents 2 x 40 Gbps fabric channels that are assigned to each slot, providing 80 Gbps per slot in total. If marketing math were used for this per-slot capacity, one could argue that the E-Series chassis provides 160 Gbps per slot.
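Here is the same marketing math applied to the Supervisor 2T figures quoted above: 26 channels at 40 Gb/s, and two channels per slot. The variable names are illustrative. Note that 26 channels at two per slot works out to exactly 13 slots, which is consistent with Cisco's claim of 80 Gbps per slot on all E-Series chassis, the 13-slot one included; that connection is my own reading of the numbers.

```python
SUP2T_CHANNELS = 26      # fabric channels on the Sup2T crossbar
SUP2T_CHANNEL_GBPS = 40  # each channel runs at 40 Gb/s (or 20 Gb/s for older cards)
CHANNELS_PER_SLOT = 2

one_way = SUP2T_CHANNELS * SUP2T_CHANNEL_GBPS         # capacity in one direction
marketing = one_way * 2                               # "full duplex" figure
per_slot = CHANNELS_PER_SLOT * SUP2T_CHANNEL_GBPS     # the quoted per-slot figure
per_slot_marketing = per_slot * 2                     # if counted like the fabric
slots_served = SUP2T_CHANNELS // CHANNELS_PER_SLOT    # slots that can get 2 channels

print(f"fabric: {one_way} Gb/s one way, {marketing} Gb/s as marketed")
print(f"per slot: {per_slot} Gb/s as quoted, {per_slot_marketing} Gb/s with marketing math")
print(f"slots with dual channels: {slots_served}")
```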

Moving on to the control-plane functions of the Sup2T, we run into the new MSFC5. The MSFC5 CPU handles Layer 2 and Layer 3 control plane processes, such as the routing protocols, management protocols like SNMP and SYSLOG, and Layer 2 protocols (such as Spanning Tree, Cisco Discovery Protocol, and others), the switch console, and more. The MSFC5 is not compatible with any other supervisor. The architecture is different from previous MSFCs, in that while previous MSFCs sported a route processor and a switch processor, the MSFC5 combines these functions into a single CPU.

The diagram also shows a “CMP”, which is a feature enhancement of merit. The CMP is the “Connectivity Management Processor,” and seems to function like an iLO port. Even if the route processor is down on the Sup2T, you can still access the system remotely via the CMP. The CMP is a stand-alone CPU that the administrator can use to perform a variety of remote management services. Examples of how the CMP can be used include: system recovery of the control plane; system resets and reboots; and the copying of IOS image files should the primary IOS image be corrupted or deleted. Implicitly, you will have deployed an out-of-band network or other remote management solution to be able to access the CMP, but the CMP enhances our ability to recover a borked 6500 from far away.

The PFC4/DFC4 comprise the next major component of the Sup2T. The PFC4 rides as a daughter card on the supervisor, and is the hardware slingshot that forwards data through the switch. The DFC4 performs the same functions, only it rides on a linecard, keeping forwarding functions local to the linecard as opposed to passing them through the fabric up to the PFC4.
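The split between centralized and distributed forwarding can be modelled in a few lines. This is a deliberately simplified sketch: a frame arriving on a card fitted with a DFC4 is looked up on that card, while a frame arriving on a card with only a CFC has its lookup done by the PFC4 on the supervisor. The `Card` class and the two-field card model are hypothetical and ignore everything else the forwarding engines do.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    slot: int
    forwarding: str  # "CFC" (centralized) or "DFC4" (distributed); simplified


def lookup_engine(ingress: Card) -> str:
    """Which forwarding engine performs the lookup for a frame entering on this card."""
    if ingress.forwarding == "DFC4":
        return f"DFC4 in slot {ingress.slot}"
    return "PFC4 on supervisor"


cards = [Card(1, "CFC"), Card(2, "CFC"), Card(3, "DFC4"), Card(4, "DFC4")]
# One frame arrives on each card in turn, three rounds in total.
load = Counter(lookup_engine(card) for card in cards * 3)
for engine, lookups in load.items():
    print(f"{engine}: {lookups} lookups")
```

Every CFC card adds work to the single PFC4, while each DFC4 card brings its own lookup engine; that is the scaling argument for putting DFCs on busy linecards.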

The majority of packets and frames transiting the switch are going to be handled by the PFC, including IPv4 unicast/multicast, IPv6 unicast/multicast, Multi-Protocol Label Switching (MPLS), and Layer 2 packets. The PFC4 also performs in hardware a number of other functions that could impact how a packet is forwarded. This includes, but is not limited to, the processing of security Access Control Lists (ACLs), applying rate limiting policies, quality of service classification and marking, NetFlow flow collection and flow statistics creation, EtherChannel load balancing, packet rewrite lookup, and packet rewrite statistics collection.

The PFC performs a large array of functions in hardware, including the following list I’m lifting from Cisco’s architecture whitepaper.


- Layer 2 functions:

- Increased MAC Address Support – a 128 K MAC address table is standard.

- A bridge domain is a new concept that has been introduced with PFC4. A bridge domain is used to help scale traditional VLANs, as well as to scale internal Layer 2 forwarding within the switch.

- The PFC4 introduces the concept of a Logical Interface (LIF), which is a hardware-independent interface (or port) reference index associated with all frames entering the forwarding engine.

- Improved EtherChannel Hash – EtherChannel groups with odd numbers of members will see a better distribution across links.

- VSS support – it appears you can build a virtual switching system right out of the box with the Sup2T. There does not seem to be a unique “VSS model” like in the Sup720 family.

- Per Port-Per-VLAN – this feature is designed for Metro Ethernet deployments where policies based on both per-port and per-VLAN need to be deployed.

- Layer 3 functions. There’s a lot here, and rather than try to describe them all, I’m just going to hit the feature names here, grouped by category. You can read in more detail in the architecture document I link to below.

- Performance: Increased Layer 3 Forwarding Performance

- IPv6: uRPF for IPv6, Tunnel Source Address Sharing, IPv6 Tunnelling

- MPLS/WAN: VPLS, MPLS over GRE, MPLS Tunnel Modes, Increased Support for Ethernet over MPLS Tunnels, MPLS Aggregate Label Support, Layer 2 Over GRE

- Multicast: PIM Register Encapsulation/De-Encapsulation for IPv4 and IPv6, IGMPv3/MLDv2 Snooping

- NetFlow: Increased Support for NetFlow Entries, Improved NetFlow Hash, Egress NetFlow, Sampled NetFlow, MPLS NetFlow, Layer 2 NetFlow, Flexible NetFlow

- QoS: Distributed Policing, DSCP Mutation, Aggregate Policers, Microflow Policers

- Security: Cisco TrustSec (CTS), Role-Based ACL, Layer 2 ACL, ACL Dry Run, ACL Hitless Commit, Layer 2 + Layer 3 + Layer 4 ACL, Classification Enhancements, Per Protocol Drop (IPv4, IPv6, MPLS), Increase in ACL Label Support, Increase in ACL TCAM Capacity, Source MAC + IP Binding, Drop on Source MAC Miss, RPF Check Interfaces, RPF Checks for IP Multicast Packets
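The “Improved EtherChannel Hash” item is easiest to see with numbers. Classic Catalyst 6500 EtherChannel load balancing hashes each flow into one of 8 buckets and spreads the buckets across the member links, so an odd member count cannot be split evenly. The sketch below compares 8 buckets with a larger bucket count; the post does not say how the PFC4 actually implements its improvement, so the 256-bucket figure is purely hypothetical, and the model assumes flows hash uniformly across buckets.

```python
def bucket_shares(links: int, buckets: int) -> list[float]:
    """Fraction of hash buckets (and so, roughly, of flows) each member link
    receives when the buckets are dealt out round-robin across the links."""
    counts = [0] * links
    for bucket in range(buckets):
        counts[bucket % links] += 1
    return [count / buckets for count in counts]


for links in (3, 4, 5):
    for buckets in (8, 256):  # 256 is a hypothetical larger bucket count
        shares = bucket_shares(links, buckets)
        print(f"{links} links, {buckets} buckets: busiest {max(shares):.1%}, "
              f"quietest {min(shares):.1%}")
```

With 8 buckets, a 3-link bundle splits 3:3:2, so two links carry 37.5% each and the third only 25%; a 4-link bundle splits evenly. The more buckets there are, the closer an odd-sized bundle gets to an even split.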

So, do you upgrade to a Sup2T? It depends. The question comes down to what you need more: speed or features. The Sup2T is extending the life of the 6500E chassis with speed and a boatload of features. That said, you can’t scale the 6500 to the sort of 10 Gbps port density you can with a Nexus. Besides, most of the features found on a 6500 aren’t going to be used by most customers. If your 6500 is positioned as a core switch, then what you really need is the core functionality of L2 and L3 forwarding to be performed as quickly as possible with minimal downtime. To me, the place to go next is the Nexus line if that description of “core” is your greatest need.

If instead you need a super-rich feature set, then the question is harder to answer. The Nexus has a ways to go before offering all of the features the Catalyst does. That’s not to say that all a Nexus offers is throughput. True, NX-OS lacks the maturity of IOS, but it offers stability better than IOS-SX and features that most customers need.

In some ways, I’m making an unfair comparison. Nexus7K and Cat6500 have different purposes, and solve different problems. But for most customers, I think either platform could meet the needs. So if you’re looking for a chassis you can leave in the rack for a very long time, it’s time to look seriously at Nexus, rejecting it only if there’s some specific function it lacks that you require. If the Nexus platform can’t solve all of your problems, then you probably have requirements that are different from merely “going faster”. The 6500/Sup2T may make sense for you.